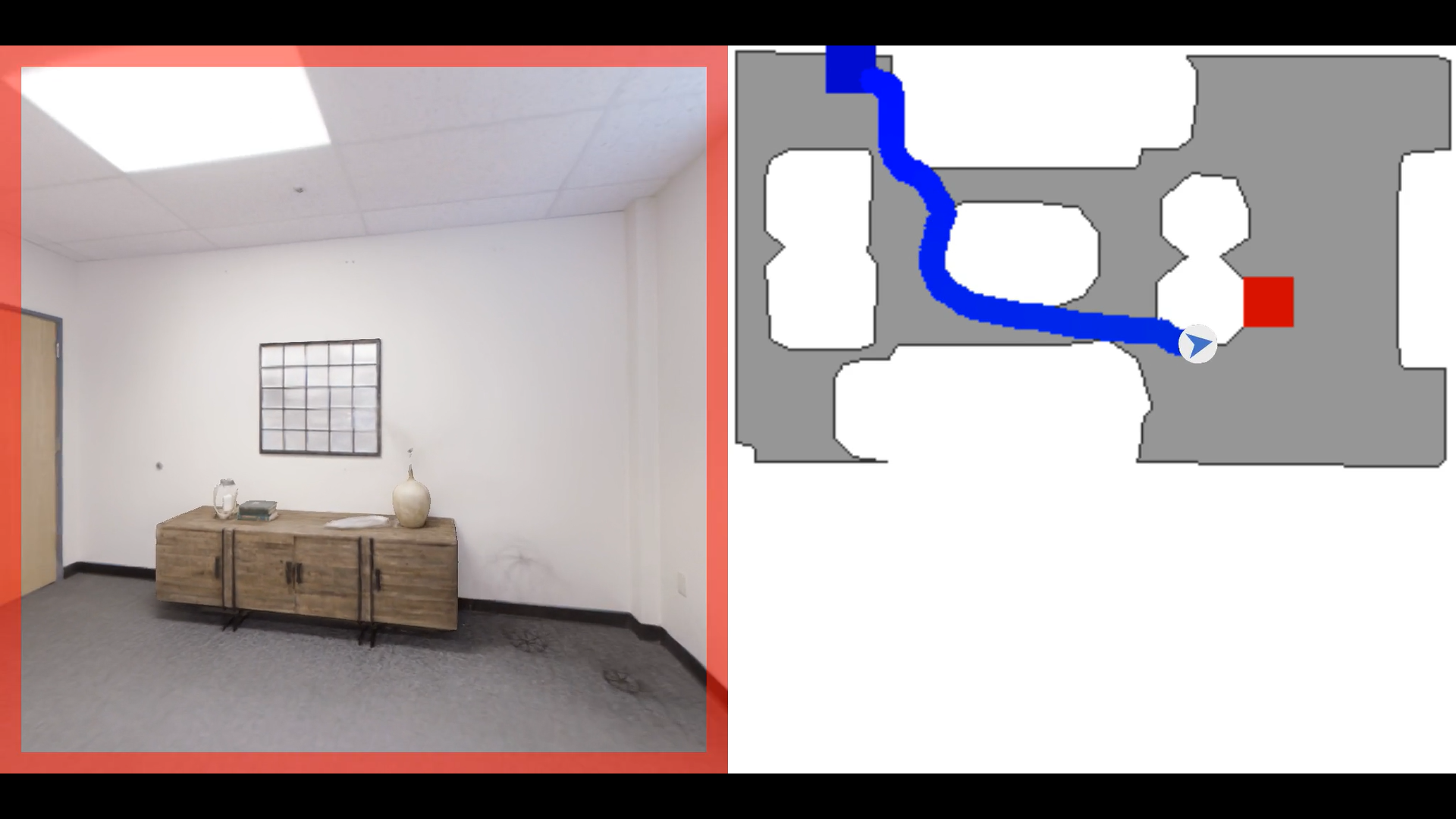

Facebook Reality Labs (known until last year as Oculus Research) has created a new open platform for embodied AI research called AI Habitat, along with a dataset of photorealistic sample spaces that it calls Replica. Both Habitat and Replica are now available for researchers to download on GitHub. With these tools, researchers can train AI bots to act, see, talk, reason and plan simultaneously. The Replica dataset consists of 18 sample spaces, including a living room, a conference room and a two-story house. By training an AI bot to respond to a command like “bring my keys” in a Replica 3D simulation of a living room, researchers hope that physical robots will someday be able to do the same in a real living room.

A Replica simulation of a living room is meant to capture all the subtle details one might find in a real living room, from the velour throw on the sofa to the reflective decorative mirror on the wall. The 3D simulations are photorealistic: even surfaces and textures are captured in sharp detail, which Facebook says is essential to training bots in these virtual spaces. “Much as the FRL research work on virtual humans captures and enables transmission of the human presence, our reconstruction work captures what it is like to be in a place; at work, at home, or out and about in shops, museums, or coffee shops,” said Richard Newcombe, a research director at Facebook Reality Labs, in a blog post.

Some researchers have already taken Replica and AI Habitat for a test drive. Facebook AI recently hosted an autonomous navigation challenge on the platform. The winning research team will be announced Sunday at this year’s Conference on Computer Vision and Pattern Recognition (CVPR).
